Why Not the Error Chart?

July 14, 2014 by James Wing

In an earlier post, I introduced The Troubleshooting Chart and showed how building such a chart can help you troubleshoot an issue, communicate its status, and monitor the success of a fix. In this post, I'll examine why many troubleshooters prefer error data to success data, and why I disagree.

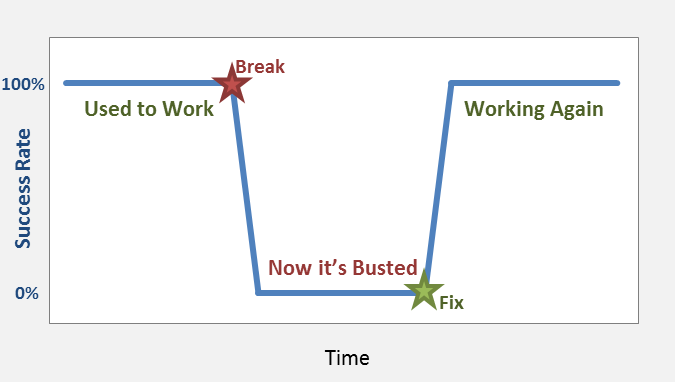

Why go through all the trouble to collect success rate data, when error data might be more readily at hand? We can simply re-envision the chart based on error rates. The original Troubleshooting Chart,

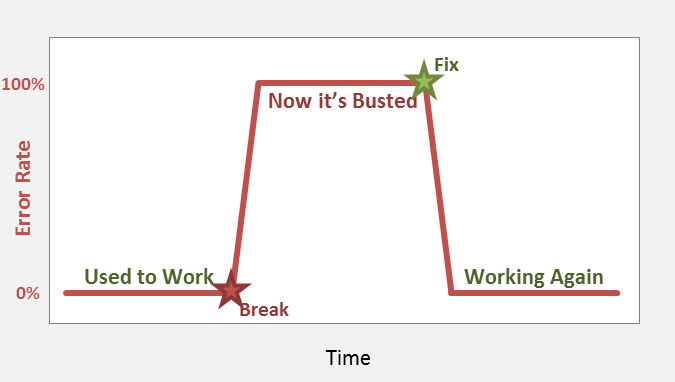

Now becomes The Error Chart:

Wouldn't that be simpler to visualize and more directly attack the incident? This is a tough argument to dismiss, and it frequently comes packaged with technical and social resistance to the success rate analysis demanded by the Troubleshooting Chart. These arguments include:

- Incidents == Errors - In a serious incident, there will certainly be related errors, and the Signal-to-Noise ratio favors error data during an incident.

- Geeks Prefer Logs - Technical people would feel more comfortable looking at error logs and error messages. Log data is by-Geek-for-Geek. In contrast, the success rate data seems like a strange and foreign exercise better suited to "Suits".

- Get to Root Cause ASAP - Examining errors is on the critical path to finding the root cause. But success rate analysis is an extra step at best, maybe even a distraction.

I've definitely fallen for this more than once. But jumping to the error logs without looking at the success rate is really the same thing as the guess-and-check troubleshooting methodology mentioned in my earlier post on troubleshooting process. Of course, I will attempt to exhaustively enumerate the reasons why errors are not as good:

- Errors != Success * -1 - The presence of errors is not the same as the absence of success. Above, I argued that errors would be present in a serious incident, but that isn't always true. Some incidents, like server outages and network problems, may be accompanied by a deafening silence of error messages. There may also be cases where operations fundamentally succeed, but log errors anyway. Does the absence of errors mean that everything is working perfectly? Probably not.

- Separate Fixes - The fix for the success rate and the fix for errors might be different. This is especially true with respect to evaluating workarounds, but also true when the underlying problem is bigger than the visible errors suggest -- for example, what is reported as a permissions issue is actually a network connectivity problem.

- Error Data Quality is Suspect - In my experience, success rate data is derived from operational systems with good data quality, and error data comes from logs of dubious quality. Not always, but verifying error scenarios tends to be lower on everyone's priority list than the principal success flows, so the data quality suffers.

- What's the Impact? - Errors typically don't specify impact, and customers care about impact. Errors are indeed written by-Geeks-for-Geeks, and this makes them less than ideal for explaining the magnitude of an incident to customers or "Suits" within your organization. Yes, you really should communicate with them.

- Noisy - The Signal-to-Noise ratio might not favor errors. Depending on your company's practices, your error logs might be a constant stream of noise, rather than the concise action trigger you imagine.
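To make the first and last points concrete, here is a minimal Python sketch that computes both a success rate and a logged-error rate from the same time buckets. Everything in it is hypothetical and made up for illustration: the `Bucket` fields, the per-minute baseline of 100 expected operations, and the sample numbers. It is not taken from any real system or from the original Troubleshooting Chart data.

```python
from dataclasses import dataclass


@dataclass
class Bucket:
    minute: int
    expected: int   # assumed baseline: operations normally seen in this minute
    succeeded: int  # operations the operational system recorded as completed
    errors: int     # error lines found in the logs for this minute


def success_rate(b: Bucket) -> float:
    """Fraction of the expected operations that actually succeeded."""
    return b.succeeded / b.expected if b.expected else 0.0


def error_rate(b: Bucket) -> float:
    """Logged errors per expected operation."""
    return b.errors / b.expected if b.expected else 0.0


# Illustrative data only, invented for this sketch.
buckets = [
    Bucket(0, 100, 98, 2),
    Bucket(1, 100, 97, 3),
    Bucket(2, 100, 0, 0),    # outage: nothing succeeds, and nothing is logged
    Bucket(3, 100, 0, 0),
    Bucket(4, 100, 99, 40),  # recovered: operations succeed but log noisy errors
]

for b in buckets:
    print(
        f"minute {b.minute}: "
        f"success {success_rate(b):.0%}, logged errors {error_rate(b):.0%}"
    )
```

In this made-up data, minutes 2 and 3 would look like the healthiest stretch on an error chart, while minute 4 would look like the worst. Charting success against an expected baseline tells the opposite, and more useful, story.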

Still not convinced? Well, if you must dive into the errors while investigating your incident, I hope you will at least make The Troubleshooting Chart when you are finished to double-check your work. It might be a great opportunity to divide-and-conquer with a team member.

We'll take a look at the communication and coordination benefits of The Troubleshooting Chart next time.
